Recently I encountered some VM refresh issues in View I felt would be helpful to share. Using the View administrator I attempted to refresh a couple of linked clones manually. Some of the refresh operations completed successfully, but some did not. Upon investigating the recorded View events I found the below.

Next I moved onto checking the the vSphere datacenter logs that housed the linked clones and I found the checkpoint.vmdk was unable to be deleted. The VM was deleted and some of it’s associated files were removed although some were not.

I successfully deleted most of the files through the datastore browser but the VM-Name-checkpoint.vmdk and VM-Name.vmdk were locked. I SSHed into each host in the cluster to determine which host held the lock. I used the command:

vmkfstools -D /vmfs/volumes/DATASTORE-NAME/VM-FOLDER/VM-NAME-checkpoint.vmdk

Below is the output. For more information about investigating files locked by ESXi click HERE.

~ # vmkfstools -D /vmfs/volumes/DATASTORE-NAME/VM-FOLDER/VM-NAME-checkpoint.vmdk

Lock [type 10c00001 offset 63735808 v 2894, hb offset 3915776

gen 43, mode 0, owner 00000000-00000000-0000-000000000000 mtime 3240812

num 0 gblnum 0 gblgen 0 gblbrk 0]

Addr <4, 116, 145>, gen 2786, links 1, type reg, flags 0, uid 0, gid 0, mode 600

len 331, nb 1 tbz 0, cow 0, newSinceEpoch 1, zla 2, bs 65536

~ #

Normally when a file is locked by a host the end of the owner value highlighted in red should be the MAC address of the host that locked the particular file. The mtime field shows how long before a lock expires. Monitoring the mtime field gives you an indication if the lock is getting updated or not. If you see it decrease that means the lock is counting down, up the lock is increasing. In my situation each time a lock occurs the owner value is all Os, and the mtime is not counting down. What is particularly strange about this is each host I ran the command on reported the same thing. All 0’s in the owner field implies that the host which ran the vmkfstools command owns the lock. It could also mean no host owns the lock, but it seems you would need to take in account the mtime and mode field to form that assertion. More about vmkfstools can be found HERE.

Mode types

mode 0 = no lock

mode 1 = is an exclusive lock (vmx file of a powered on VM, the currently used disk (flat or delta), *vswp, etc.)

mode 2 = is a read-only lock (e.g. on the ..-flat.vmdk of a running VM with snapshots)

mode 3 = is a multi-writer lock (e.g. used for MSCS clusters disks or FT VMs).

After checking each file on each host all report 0s for the owner, the mtime is the same, and the mode is 0. For a few VMs simply waiting resolved the issue. The down side to this is you could be waiting a few minutes, hours, or days for the lock to expire. Continuing to troubleshoot I SSHed into a host, and ran the below commands.

cd /vmfs/volumes/DATASTORE-NAME/VM-NAME/

cp VM-NAME-delta.vmdk VM-NAME-delta.old.vmdk

mkdir oldvmmdk

mv VM-NAME.vmdk oldvmmdk/

ls -l

cp VM-NAME-delta.vmdk VM-NAME-delta.old.vmdk

ls l-

rm -f VM-NAME-delta.vmdk

First I changed the directory to the Datastore, then the VM folder. Once in the appropriate folder I tried renaming the VM files then I again tried to delete them which failed. I made a sub folder called oldvmdk and tried moving the VM files into the new folder. That succeeded despite being unable to delete the files. Now that the files are in the subfolder I tried to delete them once more which failed.

As I stepped through the commands I also checked the VM folder permissions with the “ls -lah” command. The permissions were correct so I could rule that out.

/vmfs/volumes/DATASTORE_GUID/VM-NAME/ # ls -lah

drwxr-xr-x 1 root root 420 Nov 4 13:22 .

drwxr-xr-t 1 root root 22.3K Oct 15 07:54 ..

-rw------- 1 root root 6.5G Oct 30 19:49 VM-NAME-checkpoint-delta.old.vmdk

Then I turned to checking the logs real time while I attempted to delete the files. The way I did this was to open two Putty sessions to the same host. On one of the sessions issue the below commands to pipe out all activity surrounding the problem VM. In the other Putty session issue the “rm -f VM-NAME-delta.vmdk” command.

esxcli vm process list |greg -i VM-NAME

ps |grep vmx |grep -i VM-NAME

cat /var/log/vmkernel.log |greg -i VM-NAME

cat /var/log/vmkwarning.log |greg -i VM-NAME

cat /var/log/hostd.log |greg -i VM-NAME

tail -f /var/log/vmkernel.log

As the file fails to delete it should pipe out useful information. I found the below output and this time it did return an owner!

2013-11-04T13:48:45.095Z cpu11:5454643)FS3: 1393: vol 'DATASTORE_NAME', lock at 173475840: [Req mode: 1] Not free:

2013-11-04T13:48:45.095Z cpu11:5454643)[type 10c00004 offset 173475840 v 158, hb offset 3338752

gen 4307, mode 1, owner XXXXXXXX-XXXXXXXX-XXXX-3c4a92f61680 mtime 2278287

num 0 gblnum 0 gblgen 0 gblbrk 0]

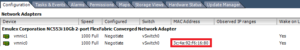

Again, the tail end of the owner field highlighted in red is the MAC address of the host holding the lock. To confirm the host simply go to the Configuration tab then the Network Adapters tab on each ESXi host until you find the culprit.

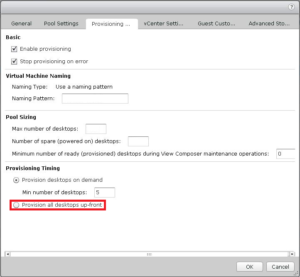

From that host you will be able to delete the files. You should also vMotion any VMs off and just give the host a reboot. Once resolved however, if your pool is configured to provision desktops on demand you won’t know if the issue is truly resolved until the VM is needed. Per the screenshot below configure the pool to provision all desktops up-front and watch the provisioning complete. Once your new VMs are provisioned you can flip your pool back to provision desktops on demand.

Before configuring the View pool to provision all desktops up-front you should confirm you have the free space and I/O to do so. If it is a low number of VMs it’s probably not a big deal if you have lots of free space. If it’s a high number probably not a good idea to do it during I/O sensitive times even if you have the needed free space.